KubeAdm with Local Kubernetes Repo for IPv6

V1.4

Goal

In working with my lazyjack tool to create IPv6 based Kubernetes clusters on bare metal systems, I wanted to run the latest code on master (1.10.0-beta.2 at this time) to run E2E tests and possibly tweak things. So, I needed to be able to run KubeAdm with my own repo, instead of using something prebuilt from upstream.

In addition, I wanted to make sure that I could do some customizing with lazyjack.

Preparation

As described in my blog post on lazyjack, I have a three node, bare-metal setup, with a second interface connected to a physical switch, for the Kubernetes management/pod network. The lazyjack tool is installed on the nodes and I have a config.yaml with the network topology, including all IP addresses desired. Development tools (go, git, etc) are installed on the node used as the master node.

I’m using Ubuntu 16.04 on each of my systems.

Next, I pulled down the latest Kubernetes code:

mkdir -p ~/go/src/k8s.io

cd ~/go/src/k8s.io

git clone https://github.com/kubernetes/kubernetes.git

cd kubernetes

Now, we are ready to set things up to use this repo for the Kubernetes cluster.

Steps

Repo Prep

I checked out a branch that had the version I wanted. At the time of this writing, beta 2 of 1.10 was available:

cd ~/go/src/k8s.io/kubernetes

git checkout -b release-1.10 origin/release-1.10

git checkout v1.10.0-beta.2

Building/Installing

Next, I built everything and installed the kubectl, kubeadm, and kubelet binaries, so that we’re using the latest for everything. I then restarted kubelet to get the new version running:

make clean

make

make release

cd _output/bin/

sudo cp kubeadm kubectl kubelet /usr/bin/

sudo systemctl daemon-reload

sudo systemctl restart kubelet

I copied these three binaries over to the two minion systems, placed them in /usr/bin, and restarted kubelet to update them as well.

The release images are in TAR files, which can be loaded into docker:

cd ~/go/src/k8s.io/kubernetes/_output/release-images/amd64

for f in *.tar; do docker load -i $f; done

Startup Local Registry

The docker daemon needs to know about an insecure registry that is being created on one of the nodes. Create the following file on each system:

cat > /etc/docker/daemon.json <<EOT

{

"insecure-registries": ["10.86.7.77:5000"]

}

EOT

systemctl restart docker

I guess I could have used the management IP for the master node ([fd00:20::2]), but I decided to use the admin interface IP of the machine on the lab network. In this case, it was 10.86.7.77. Replace this with your master node’s IP address.
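To avoid retyping the heredoc on each node, the file contents can be generated from the registry address. This is a minimal sketch; the function name `render_daemon_json` is my own, and it only emits the single key used in this post, so writing its output over an existing daemon.json would drop any other settings already there.

```shell
# Print a minimal daemon.json that trusts one insecure registry.
# Assumption: no other daemon settings need to be preserved.
render_daemon_json() {
    # $1 is the registry as host:port, e.g. 10.86.7.77:5000
    printf '{\n  "insecure-registries": ["%s"]\n}\n' "$1"
}
```

On a node, this could be used as `render_daemon_json 10.86.7.77:5000 | sudo tee /etc/docker/daemon.json`, followed by `sudo systemctl restart docker`.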

On the master node, you want to start the local registry:

docker run -d -p 5000:5000 --restart always --name registry registry:2

Tagging images

Note: If there is an easier way than this (like some make target), please let me know…

After making the release and doing a docker load for each tar file, there are a bunch of images in the local Docker image store (they are not in the registry until they are pushed). We need to tag these images with our master node’s IP and port 5000 (10.86.7.77:5000 in my case). This is a bit complicated, as most of the images built do not have the -amd64 suffix and the tag used has an underscore, which doesn’t play well in the sandbox. Hopefully there is an easier way, but this is what I did…

I first ran “docker images” to find the tag that was created (e.g. v1.10.0-beta.2.17_3d19fe4010c246-dirty). Here’s the list of images:

k8s.gcr.io/hyperkube-amd64

gcr.io/google_containers/kube-apiserver

k8s.gcr.io/kube-apiserver

gcr.io/google_containers/kube-controller-manager

k8s.gcr.io/kube-controller-manager

gcr.io/google_containers/cloud-controller-manager

k8s.gcr.io/cloud-controller-manager

k8s.gcr.io/kube-aggregator

gcr.io/google_containers/kube-aggregator

gcr.io/google_containers/kube-scheduler

k8s.gcr.io/kube-scheduler

gcr.io/google_containers/kube-proxy

k8s.gcr.io/kube-proxy

With that, I could filter by the tag and build up the commands needed to tag these images for my local repo (10.86.7.77:5000). You can see that I had to strip out the registry name to just have the image name part for the local repo tag:

docker images \

--format="docker tag {{.Repository}}:{{.Tag}} 10.86.7.77:5000/{{.Repository}}:v1.10.0-beta.2" | \

grep v1.10.0-beta.2.17_3d19fe4010c246 | \

sed -e "s?5000/gcr.io/google_containers?5000?" | \

sed -e "s?5000/k8s.gcr.io?5000?" > x

chmod 777 x

You can do the same as above, only replace the tag (e.g. v1.10.0-beta.2.17_3d19fe4010c246-dirty) with what you have for a tag. Also, since we need images with the -amd64 suffix, the same command can be tweaked to create those tags too:

docker images \

--format="docker tag {{.Repository}}:{{.Tag}} 10.86.7.77:5000/{{.Repository}}-amd64:v1.10.0-beta.2" | \

grep v1.10.0-beta.2.17_3d19fe4010c246 | \

sed -e "s?5000/gcr.io/google_containers?5000?" | \

sed -e "s?5000/k8s.gcr.io?5000?" > y

chmod 777 y

Before invoking “y”, you need to remove the “hyperkube-amd64” line from it (first one?), as that image already has the right suffix. You can then invoke these two files to create all the tags needed. I don’t know if you need the tags in file “x” (other than the hyperkube-amd64 one), so you could try skipping that file, running just its hyperkube-amd64 line along with file “y”. In my Kubernetes cluster, I think all the images had the -amd64 suffix.
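The name mapping done by the two sed pipelines can also be written as one small function, which avoids hand-editing the generated file for hyperkube-amd64. This is only a sketch of the same idea, not something I ran for this post: `local_ref` is a made-up name, and it produces only the -amd64 names (on the guess that those are the only ones the cluster pulls).

```shell
# Map a locally built image reference to the name to use in the local
# registry: drop the upstream registry path, make sure the name ends in
# -amd64, and replace the build tag with a clean version tag.
local_ref() {
    src="$1"        # e.g. k8s.gcr.io/kube-proxy:v1.10.0-beta.2.17_3d19fe4010c246-dirty
    registry="$2"   # e.g. 10.86.7.77:5000
    version="$3"    # e.g. v1.10.0-beta.2
    repo="${src%:*}"      # strip the trailing :tag
    name="${repo##*/}"    # strip registry host and namespace
    case "$name" in
        *-amd64) ;;                      # hyperkube-amd64 already has the suffix
        *) name="${name}-amd64" ;;
    esac
    printf '%s/%s:%s\n' "$registry" "$name" "$version"
}
```

It could be driven with something like `docker images --format '{{.Repository}}:{{.Tag}}' | grep v1.10.0-beta.2.17_3d19fe4010c246 | while read -r src; do docker tag "$src" "$(local_ref "$src" 10.86.7.77:5000 v1.10.0-beta.2)"; done`. The gcr.io/google_containers and k8s.gcr.io copies of an image map to the same local name, which is harmless since they share an image ID.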

Pushing Images

With everything tagged, you can then push these images to your local repo. I did this command, to first check that I have the right syntax for my tags:

docker images | grep 10.86.7.77:5000

10.86.7.77:5000/hyperkube-amd64 v1.10.0-beta.2 351430a5275d 3 days ago 633 MB

10.86.7.77:5000/kube-apiserver v1.10.0-beta.2 2e8a0bd89199 3 days ago 224 MB

10.86.7.77:5000/kube-apiserver-amd64 v1.10.0-beta.2 2e8a0bd89199 3 days ago 224 MB

10.86.7.77:5000/kube-controller-manager v1.10.0-beta.2 80ea4fb85ccb 3 days ago 147 MB

10.86.7.77:5000/kube-controller-manager-amd64 v1.10.0-beta.2 80ea4fb85ccb 3 days ago 1 ...

If that looks good (registry, image name, and tag), create and invoke the following file in order to push them up:

docker images --format="docker push {{.Repository}}:{{.Tag}}" | \

grep 10.86.7.77:5000 > z

chmod 777 z

./z
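One way to confirm the pushes landed is to ask the registry for its catalog. The registry:2 image serves the Docker Registry HTTP API, whose `/v2/_catalog` endpoint returns a JSON list of repository names. The helper below is a rough sketch (the name `catalog_has_repo` is mine) that checks that JSON for one name with a plain string match rather than a real JSON parser.

```shell
# Succeed if the catalog JSON read from stdin lists the given repository.
# The name is matched with its surrounding quotes so that "kube-dns" does
# not match "k8s-dns-kube-dns-amd64".
catalog_has_repo() {
    grep -qF "\"$1\""
}
```

For example: `curl -s http://10.86.7.77:5000/v2/_catalog | catalog_has_repo kube-proxy-amd64 && echo found`.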

Don’t Forget These Images…

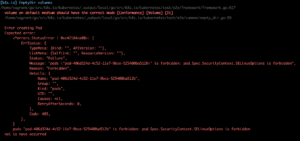

Once I tried this all out, I hit a problem with missing images. It turns out that KubeAdm is using some older versions of etcd (3.2.16) and kube-dns (1.14.8) images. To be sure I had these in my local registry (because everything will come from there), I needed to handle these specially:

docker pull gcr.io/google_containers/etcd-amd64:3.2.16

docker tag gcr.io/google_containers/etcd-amd64:3.2.16 10.86.7.77:5000/etcd-amd64:3.2.16

docker push 10.86.7.77:5000/etcd-amd64:3.2.16

docker tag gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.8 10.86.7.77:5000/k8s-dns-kube-dns-amd64:1.14.8

docker push 10.86.7.77:5000/k8s-dns-kube-dns-amd64:1.14.8

docker tag gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.8 10.86.7.77:5000/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker push 10.86.7.77:5000/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker tag gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.8 10.86.7.77:5000/k8s-dns-sidecar-amd64:1.14.8

docker push 10.86.7.77:5000/k8s-dns-sidecar-amd64:1.14.8

As you can see, I didn’t have the etcd image, and had to pull it first. You can check with “docker images” to see if you have to pull any images, before tagging and pushing. Note: For some systems, I’ve had to pull from k8s.gcr.io instead of gcr.io/google_containers.

The versions of etcd and kube-dns that KubeAdm uses are hard coded, so I had to find them by trial and error. Looking at logs, I noticed that these pods were not coming up, and saw what image versions were being tried in the pulls (e.g. 10.86.7.77:5000/etcd-amd64:3.2.16). I would then pull that version from k8s.gcr.io or gcr.io/google_containers and push it up to my local repo.
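Since the pull, tag, and push steps are the same for every extra image, they can be generated from a list. The sketch below only prints the commands so they can be reviewed first; `mirror_cmds` is a name I made up. Unlike what I did above, it pulls every image, which does no harm if the image is already present locally.

```shell
# Print the docker commands needed to copy images from an upstream
# registry path into the local registry. Nothing is executed here.
mirror_cmds() {
    upstream="$1"   # e.g. k8s.gcr.io or gcr.io/google_containers
    registry="$2"   # e.g. 10.86.7.77:5000
    shift 2
    for image in "$@"; do   # each argument is name:tag
        echo "docker pull $upstream/$image"
        echo "docker tag $upstream/$image $registry/$image"
        echo "docker push $registry/$image"
    done
}
```

For the images in this post: `mirror_cmds k8s.gcr.io 10.86.7.77:5000 etcd-amd64:3.2.16 k8s-dns-kube-dns-amd64:1.14.8 k8s-dns-dnsmasq-nanny-amd64:1.14.8 k8s-dns-sidecar-amd64:1.14.8`, piping the output to `sh` once it looks right.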

Bringing Up The Cluster

To bring things up quickly, I’m using a current release (v1.0.6) of my lazyjack tool, but you can do the same manually, if you’re a masochist :). I installed it in /usr/local/bin so that it is in my path.

I created a config.yaml for my setup to represent the topology and addresses that I wanted to use (see lazyjack README.md for details on this file).

For this effort, we need to customize the generated kubeadm.conf file. As a convenience, I overrode the default work area to point to an area under the account I was using (though you can use the default /tmp/lazyjack/ area, if desired):

general:

    plugin: bridge

    work-area: "/home/c2/bare-metal/work-area"

I ran “sudo lazyjack init” to create the certificates needed and place them into the config.yaml file. I copied this updated YAML file to the minion nodes, which also have lazyjack installed.

Next, I ran “sudo lazyjack prepare” on the master, and each of the minion nodes. There should be bind9 and tayga containers running on the master, for the DNS64 and NAT64 servers, respectively. This step will also create a kubeadm.conf file in the work area.

To bring up the cluster with the local repo, we need to edit that file to change the kubernetesVersion line to the version tag we are using (instead of 1.9.0 that the tool created), and add the imageRepository line pointing to our repo. In this example, I used:

kubernetesVersion: v1.10.0-beta.2

imageRepository: 10.86.7.77:5000
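I made this edit by hand, but it could be scripted so it is repeatable after each “prepare”. The following is a sketch under a couple of assumptions: that both keys sit at the start of a line (top level) in the generated file, and that rewriting the file through a temporary copy is acceptable. The function name `patch_kubeadm_conf` is hypothetical.

```shell
# Set kubernetesVersion and imageRepository in a kubeadm.conf file.
# Assumption: both keys are top-level, i.e. start in column 0.
patch_kubeadm_conf() {
    conf="$1"       # path to kubeadm.conf in the work area
    version="$2"    # e.g. v1.10.0-beta.2
    registry="$3"   # e.g. 10.86.7.77:5000
    # Replace the version line that the tool generated.
    sed "s|^kubernetesVersion:.*|kubernetesVersion: $version|" "$conf" > "$conf.tmp" &&
        mv "$conf.tmp" "$conf"
    if grep -q '^imageRepository:' "$conf"; then
        # Already present (e.g. on a second run): update it in place.
        sed "s|^imageRepository:.*|imageRepository: $registry|" "$conf" > "$conf.tmp" &&
            mv "$conf.tmp" "$conf"
    else
        echo "imageRepository: $registry" >> "$conf"
    fi
}
```

With my work area, that would be `patch_kubeadm_conf /home/c2/bare-metal/work-area/kubeadm.conf v1.10.0-beta.2 10.86.7.77:5000`, run with enough permissions to write the file.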

Now, on the master, I ran “sudo lazyjack up”. This takes a few minutes, and the output should indicate success and provide the lines to setup kubectl:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run kubectl and make sure that all the pods and services are up and running. Then, you can run the “up” command on the other nodes and check with kubectl that the nodes are “ready” and the additional proxy pods are running.
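For the node check, a small filter over the `kubectl get nodes --no-headers` output can list anything that is not yet ready. This is a sketch that assumes the usual column order (name first, status second); the function name and the node names in the usage note are made up.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}
```

Usage would be `kubectl get nodes --no-headers | not_ready_nodes`, which prints nothing once every node is ready. `kubectl get pods --all-namespaces -o wide` gives the matching view for the pods.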

You should be able to then use your IPv6 based cluster, running code from your local repo!

Issues

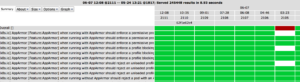

Please be sure to use docker version 17.03, as versions 17.06, 17.09, 17.12, 18.03, and 18.04 are showing that, even with the host enabling IPv6, any containers created have IPv6 disabled. The effect seen is that the kube-dns pod is stuck in “creating” state, and logs show that the CNI plugin is failing to add an IPv6 address to the pod (permission denied error). The same is true for any user-created pods that use the pod network and need an IPv6 address from the CNI plugin. This is discussed in this CNI issue and in this docker issue.
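A quick guard before bringing up a node is to compare the docker server version against 17.03. The check below is a minimal sketch that works on the version string only (the function name is mine); it does not test IPv6 inside a container.

```shell
# Succeed only for docker 17.03.x version strings (e.g. 17.03.2-ce),
# the release line that worked for IPv6 pods in my setup.
docker_version_ok() {
    case "$1" in
        17.03.*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example: `docker_version_ok "$(docker version --format '{{.Server.Version}}')" || echo "docker is not 17.03; IPv6 pods may fail"`.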