End-To-End Testing

Updated 6/8/2017

I had been trying to follow the community page on end-to-end testing, but kept striking out. I tried it natively on a Mac (specifying KUBERNETES_PROVIDER=vagrant), on bare metal, and inside a VirtualBox VM running on a Mac. Each gave me different problems, which I’ll spare you in this blog. Instead, I’ll cut to the chase and describe what works…

One of the Kubernetes developers (@ncdc) was kind enough to give me info on a working method, which I’ll walk through here.

Preparations

First, though, here is what I have for a setup:

- Mac host (shouldn’t matter).

- CentOS 7 Vagrant box with 40GB drive, running via VirtualBox.

- VM has 8GB RAM and 2 CPUs configured.

- Go 1.8.1 installed and $GOPATH set up. Created ~/go/src/k8s.io as a local work area.

- Tools installed: git, docker, emacs (or your favorite editor).

- Kubernetes repo cloned from within the work area (latest try used commit b77ed78):

git clone https://github.com/kubernetes/kubernetes.git

Started up the docker daemon with:

sudo systemctl enable docker && sudo systemctl start docker

After trying this whole E2E process on a fresh VM setup, I found that when I tried to run the tests, the ginkgo app was not found in the expected areas under _output/. To remedy this, I did “make”, which builds everything, including ginkgo, and places it in _output/local/go/bin/ginkgo. Maybe there is a way to just build ginkgo, but for now, this works.

Starting The Cluster

From my Kubernetes repo area, ~/go/src/k8s.io/kubernetes, I made sure that etcd was installed and PATH was updated, as suggested:

hack/install-etcd.sh

export PATH=$PATH:`pwd`/third_party/etcd

Next, build hyperkube and kubectl:

make WHAT='cmd/hyperkube cmd/kubectl'

You can then start up the cluster, using:

sudo LOG_LEVEL=4 API_HOST=10.0.2.15 ENABLE_RBAC=true -E PATH=$PATH ./hack/local-up-cluster.sh -o _output/bin/

API_HOST is set to the address of the node’s main interface, which for a VirtualBox VM is usually 10.0.2.15. If you are running as root (I haven’t tried that, so YMMV), you won’t need sudo or the “-E PATH=$PATH” clause. Feel free to use a different LOG_LEVEL, too.

Running Tests

Once everything is up, you’ll get a message on how to use the cluster from another window. So, I opened another terminal window, did “vagrant ssh” to access my VM, and changed to the Kubernetes directory. I did these commands to prepare for a test run:

sudo chown -R vagrant /var/run/kubernetes $HOME/.kube

export KUBERNETES_PROVIDER=local

export KUBECONFIG=/var/run/kubernetes/admin.kubeconfig

Here, my user is “vagrant”. To prevent e2e failures, @ncdc told me to always rerun the chown command after stopping and restarting the cluster.

The cluster can be examined with the kubectl script, for example “cluster/kubectl.sh get nodes”.

Now, you can run the end-to-end tests, with your desired ginkgo.focus. Here is an example:

go run ./hack/e2e.go -- -v -test -test_args '--ginkgo.v --ginkgo.focus Kubectl.expose'

At the end of the run, you’ll see this type of output:

Ran 1 of 631 Specs in 28.812 seconds

SUCCESS! -- 1 Passed | 0 Failed | 0 Pending | 630 Skipped PASS

Ginkgo ran 1 suite in 29.156205396s

Test Suite Passed

2017/05/30 13:40:55 util.go:131: Step './hack/ginkgo-e2e.sh -ginkgo.v -ginkgo.focus Kubectl.expose' finished in 29.254525787s

2017/05/30 13:40:55 e2e.go:80: Done

I ran Conformance tests, with:

go run ./hack/e2e.go -- -v -test -test_args '--ginkgo.v --ginkgo.focus \[Conformance\]'

At the end of the output, I could see the test results:

Ran 148 of 646 Specs in 3493.031 seconds

FAIL! -- 127 Passed | 21 Failed | 0 Pending | 498 Skipped --- FAIL: TestE2E (3493.05s)

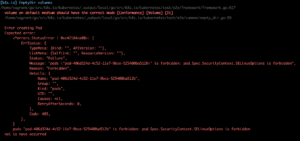

All of these failures were under framework/pods.go and related to Volumes. I’m not sure what is wrong, but it looks like some were failures to create pods due to the security context.
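When comparing several long runs, it can help to pull the counts out of the summary line rather than eyeball them. Here is a minimal Go sketch that does this for the line shown above. The parseSummary helper and the summary type are my own hypothetical names, not part of the Kubernetes test tooling, and the pattern assumes the summary keeps the "N Passed | N Failed | N Pending | N Skipped" shape seen in this post.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// summaryRE matches the counts in a Ginkgo summary line of the form shown
// in this post. The exact format is an assumption based on that output.
var summaryRE = regexp.MustCompile(`(\d+) Passed \| (\d+) Failed \| (\d+) Pending \| (\d+) Skipped`)

// summary is a hypothetical container for the four counts.
type summary struct {
	Passed, Failed, Pending, Skipped int
}

// parseSummary extracts the counts from a line, reporting false when the
// line does not contain a summary.
func parseSummary(line string) (summary, bool) {
	m := summaryRE.FindStringSubmatch(line)
	if m == nil {
		return summary{}, false
	}
	n := make([]int, 4)
	for i := range n {
		// The groups contain digits only; an error here would mean overflow.
		v, err := strconv.Atoi(m[i+1])
		if err != nil {
			return summary{}, false
		}
		n[i] = v
	}
	return summary{n[0], n[1], n[2], n[3]}, true
}

func main() {
	line := "FAIL! -- 127 Passed | 21 Failed | 0 Pending | 498 Skipped --- FAIL: TestE2E (3493.05s)"
	if s, ok := parseSummary(line); ok {
		// Passed plus Failed should equal the "Ran N of M Specs" count.
		fmt.Printf("ran %d, failed %d\n", s.Passed+s.Failed, s.Failed)
	}
}
```

For the Conformance run above, passed plus failed adds up to 148, which agrees with the "Ran 148 of 646 Specs" line.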

Learning How To Focus

The ginkgo.focus argument is a regular expression that is matched against the text of the It() clauses in the code under test/e2e/*. The pattern cannot contain quotes or spaces, so use \s wherever a space is needed. For example, searching for test cases that use the word Selector turns up:

git grep Selector | egrep "It[(]"

test/e2e/network_policy.go: It("should enforce policy based on PodSelector [Feature:NetworkPolicy]", func() {

test/e2e/network_policy.go: It("should enforce multiple, stacked policies with overlapping podSelectors [Feature:NetworkPolicy]", func() {

test/e2e/network_policy.go: It("should enforce policy based on NamespaceSelector [Feature:NetworkPolicy]", func() {

test/e2e/scheduling/predicates.go: It("validates that NodeSelector is respected if not matching [Conformance]", func() {

test/e2e/scheduling/predicates.go: It("validates that NodeSelector is respected if matching [Conformance]", func() {

test/e2e/scheduling/predicates.go: It("validates that a pod with an invalid podAffinity is rejected because of the LabelSelectorRequirement is invalid", func() {

I can run these tests with:

go run ./hack/e2e.go -- -v -test -test_args '--ginkgo.v --ginkgo.focus Selector'

It shows this near the end of the output:

Summarizing 2 Failures:

[Fail] [k8s.io] NetworkPolicy [It] should enforce policy based on NamespaceSelector [Feature:NetworkPolicy]

/home/vagrant/go/src/k8s.io/kubernetes/_output/local/go/src/k8s.io/kubernetes/test/e2e/network_policy.go:356

[Fail] [k8s.io] NetworkPolicy [It] should enforce policy based on PodSelector [Feature:NetworkPolicy]

/home/vagrant/go/src/k8s.io/kubernetes/_output/local/go/src/k8s.io/kubernetes/test/e2e/network_policy.go:356

Ran 6 of 646 Specs in 399.804 seconds

FAIL! -- 4 Passed | 2 Failed | 0 Pending | 640 Skipped --- FAIL: TestE2E (399.83s)

Because the focus is a regular expression, I could refine it to run only the three tests in network_policy.go. First, I saw that the desired tests were under this section:

var _ = framework.KubeDescribe("NetworkPolicy", func() {

f := framework.NewDefaultFramework("network-policy")

...

It("should enforce policy based on PodSelector [Feature:NetworkPolicy]", func() {

...

I then reran the tests with this focus:

go run ./hack/e2e.go -- -v -test -test_args '--ginkgo.v --ginkgo.focus NetworkPolicy.*Selector'

...

Summarizing 2 Failures:

[Fail] [k8s.io] NetworkPolicy [It] should enforce policy based on NamespaceSelector [Feature:NetworkPolicy]

/home/vagrant/go/src/k8s.io/kubernetes/_output/local/go/src/k8s.io/kubernetes/test/e2e/network_policy.go:356

[Fail] [k8s.io] NetworkPolicy [It] should enforce policy based on PodSelector [Feature:NetworkPolicy]

/home/vagrant/go/src/k8s.io/kubernetes/_output/local/go/src/k8s.io/kubernetes/test/e2e/network_policy.go:356

Ran 3 of 646 Specs in 156.527 seconds

FAIL! -- 1 Passed | 2 Failed | 0 Pending | 643 Skipped --- FAIL: TestE2E (156.61s)

There are some example labels that can be used for the focus, and the same page has some examples that you could use as well. Hint: I wouldn’t run the tests without any focus set…it takes a really long time.

When you are all done, just press Control-C in the first window to shut down the cluster. Don’t forget to rerun the chown command above if you restart the cluster.

Important Notes

A few things I found out…

Having a large disk will be important, as it is not easily resized with Vagrant. I found that 40GB was more than enough. Some Vagrant boxes are only 20GB, and I’ve run out of space on those after using them for a while.

When you run the tests, be sure to put the -v option after the double dash, or specify it inside the test_args string.

If you are changing your code and then want to retest, you can run make for just cmd/hyperkube, and then re-run local-up-cluster.sh. Hyperkube is an all-in-one binary with kube-apiserver, kubelet, kube-scheduler, kube-controller-manager, and kube-proxy.

You can use this setup for development work, although you’ll likely want to include additional tools, and maybe even play with kubeadm.

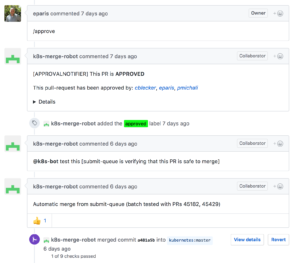

As of 5/31/2017, there is a bug in the tests that is preventing kubelet from starting. The fix is being worked on under PR 46709. In the meantime, though, you can start up the cluster with this:

sudo FEATURE_GATES=AllAlpha=false LOG_LEVEL=4 API_HOST=10.0.2.15 ENABLE_RBAC=true -E PATH=$PATH ./hack/local-up-cluster.sh -o _output/bin/

UPDATE: On 6/8/2017, I pulled the latest kubernetes and didn’t have to use this temp fix, so the change is upstreamed now.