Major Viewmaster Refactoring

V3 on 05-15-2025

With the Viewmaster movie library, I had noticed that there are over 600 titles, and when displaying in “detail” mode, it was loading all those cover URLs. The thought was that maybe I could save the cover images locally, when movies are entered into the database, and I could load the existing ones via a migration.

I created a new table for the cover URLs and files, and realized that there was quite a bit of duplication in the database, and thought that I should try to normalize the data. Through the process of making new tables and trying to migrate, I uncovered several other issues.

Long story short, I ended up undoing everything, and then designing a new table with migration to address the issues and to try to minimize duplicate information in the database. In summary, these are the concerns/issues I found out in the process:

- IMDB information was duplicated across TV and movie series, multi-disk sets that happened to have the same IMDB info, and single movies where I had multiple formats (DVD, BR, 4K). I wanted to separate out the “shared” IMDB info to reduce duplication.

- For the TV series and multi-disk sets, some of the IMDB info was wrong for an individual disk (e.g. duration, release date), and often the titles needed to be different (indicating the season number or specific disk). This meant that some IMDB info needed to be “overridden”.

- I found a few movies where the IMDB did not have a cover URL, so I needed to create a dummy cover that could be used. Likewise, I hit one specialty disk, where there was no IMDB entry at all.

- The IMDB would provide multiple genres for most movies, and my database was set up for just one. In addition, some of the genres were spelled differently (e.g. SCIENCE FICTION vs SCI-FI, WAR vs MILITARY) and some were not in my list of genres (e.g. SPORTS). Regardless of what I chose, I wanted to know which choices were recommended by IMDB.

- Since IMDB was shared among movies, I needed to remove the database entry and the cover file, when the IMDB info was no longer used.

- By the end of the process, I did notice a few problems with performance, so I’ll have some follow-up work to try to fix that.

The Plan

Since there were lots of changes, I planned on bumping the version from 0.2.3 to 0.3.0. Here is the overall plan of attack that I had.

- Define a new table (ImdbInfo) that contained just the IMDB information that I did not expect to change, even on shared disks. This covers the IMDB number, plot, actors, directors, cover URL and file.

- Because movies could override some IMDB info, I wanted to have the “original” stored in the new table, but keep modifiable copies in the Movie table. This includes duration, release date, rating, and title.

- The Movie table would still have disk specific info, like format, aspect ratio, audio, cost, good/bad indication, collection name (if applicable), and paid/gift indication.

- The Movie table would still have the selected genre (I had called it ‘category’), but the ImdbInfo table would contain the recommended genres for the IMDB title.

- As part of the migration process to add the new table, I would also download and save any cover files to the Persistent Volume used for static files.

You can clone the v0.3.0 code from GitHub to see all the changes from v0.2.3, and latest content.

Table Definition

Here is the new table definition for the IMDB information:

from django.core.files.storage import FileSystemStorage
from django.db import models

# RATING_CHOICES is defined elsewhere in the project.


class ImdbInfo(models.Model):
    """IMDB information for a movie (or series of movies)."""

    title_name = models.CharField(
        max_length=60, help_text="Up to 60 characters for title. May be overridden."
    )
    release_date = models.IntegerField(
        help_text="Four digit year of release. May be overridden."
    )
    genres = models.CharField(help_text="List of genres applicable to the movie.")
    mpaa_rating = models.CharField(
        max_length=5,
        default="?",
        choices=RATING_CHOICES,
        help_text="Select the MPAA rating. May be overridden.",
    )
    run_time = models.TimeField(
        help_text="Duration in hh:mm format. May be overridden."
    )

    # These will be common to every movie with this IMDB #
    identifier = models.CharField(
        max_length=20, unique=True, help_text="IMDB movie ID."
    )
    plot = models.CharField(blank=True, default="", help_text="Plot summary.")
    actors = models.CharField(blank=True, default="", help_text="Top cast.")
    directors = models.CharField(blank=True, default="", help_text="Director(s).")
    cover_url = models.URLField(
        blank=True, default="", help_text="URL where poster image is located."
    )
    cover_file = models.ImageField(
        blank=True,
        null=True,
        upload_to="covers",
        storage=FileSystemStorage(allow_overwrite=True),
    )

Some important things to note here. First, the fields that will remain in the Movie table use different names. This is because multiple forms will be used at movie creation/edit and we need to be able to uniquely address the fields (there is no discriminator based on the form). The sizes and types of the fields are the same.

Second, for the cover file, I selected file system storage to allow overwriting. Otherwise, if you save a cover file with the same name, it will create a new file with a suffix. I saw that when first dealing with multiple disk sets, before I resolved how to ensure only one IMDB for them.

Migration

In the several failed attempts at doing this change, I realized that there were several movie genre types that I needed to translate to what I had, and I wanted to add the “SPORTS” genre. Before creating the new table definition, I modified the CATEGORY_CHOICES dict and then ran the ‘python manage.py makemigrations’ command to create the migration file to update the table. I ran “migrate” to apply the change to the database (no change to existing entries).

With that out of the way, I then built the needed new table definition and ran “makemigrations” again to create a file to add that table, and alter the Movie table to have a ForeignKey to the new ImdbInfo table. Then, I created custom code in the migration file to do the data migration. This was added as another operation, after the table changes were done:

migrations.RunPython(copy_imdb_info, migrations.RunPython.noop),

On a “migrate” it would run the code in copy_imdb_info(), and in a reverse migration, it wouldn’t do anything, and would proceed to remove the new table and the added ForeignKey in the Movie table.

For copy_imdb_info(), the code cycles through all the entries in the current Movie table.

For each movie, the IMDB ID would be identified, and if this was the first time encountered, a new ImdbInfo entry created. All the data from the entry would be obtained via API request (versus copying from the Movie, which may have customized info). Existing extraction functions were used to obtain the info, and the cover file would be stored locally. Lastly, the ID of the new or existing ImdbInfo entry would be stored in the Movie table’s new ForeignKey field, imdb_info.
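To illustrate the "create on first encounter" part of that loop, here is a minimal, framework-free sketch. It is not the migration code from the repo: it uses plain dicts instead of Django models, the "imdb_id" key is a name I made up for the example, and fake_fetch() is a hypothetical stand-in for the API request and extraction functions.

```python
def link_movies_to_imdb(movies, fetch_imdb):
    """Create one shared info record per IMDB ID and link each movie to it.

    movies: list of dicts with a (hypothetical) "imdb_id" key.
    fetch_imdb: callable standing in for the IMDB API request.
    Returns the dict of shared records, keyed by IMDB ID.
    """
    infos = {}
    for movie in movies:
        imdb_id = movie.get("imdb_id")
        if not imdb_id:
            # No IMDB entry for this disk: leave it unlinked.
            movie["imdb_info"] = None
            continue
        if imdb_id not in infos:
            # First time this ID is seen: fetch fresh data rather than
            # copying from the movie, which may hold customized values.
            infos[imdb_id] = fetch_imdb(imdb_id)
        movie["imdb_info"] = infos[imdb_id]
    return infos


calls = []  # records every (fake) API request made


def fake_fetch(imdb_id):
    """Hypothetical replacement for the real API lookup."""
    calls.append(imdb_id)
    return {"identifier": imdb_id, "plot": "(plot for %s)" % imdb_id}


movies = [
    {"title": "Series, Season 1", "imdb_id": "tt0000001"},
    {"title": "Series, Season 2", "imdb_id": "tt0000001"},
    {"title": "Specialty disk", "imdb_id": ""},
]
infos = link_movies_to_imdb(movies, fake_fetch)
```

Both seasons end up pointing at the same record, and the lookup is only done once per IMDB ID, which is the point of sharing the info.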

For the movie genres, I had updated the list of genres, based on some new ones that I thought I’d want. Then, for the list of genres provided by IMDB (a comma separated string), I used a new filter_genres() function to extract each one, convert them to ones that matched the list that I had (renaming/substituting as needed), and then would re-build a comma separated string of the genres for storage in the new table.
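Here is a rough sketch of what that kind of genre filtering could look like. This is not the actual filter_genres() from the repo. The genre list and the rename table are hypothetical (I am assuming, for the example, that the left-hand names are the IMDB spellings), and dropping unknown genres is also an assumption.

```python
# Hypothetical subset of the genres the app knows about.
KNOWN_GENRES = {"ACTION", "COMEDY", "DRAMA", "MILITARY", "SCI-FI", "SPORTS"}

# Hypothetical renames from IMDB spellings to the app's spellings.
GENRE_ALIASES = {"SCIENCE FICTION": "SCI-FI", "WAR": "MILITARY"}


def filter_genres_sketch(imdb_genres):
    """Normalize a comma separated genre string from IMDB."""
    result = []
    for raw in imdb_genres.split(","):
        name = raw.strip().upper()
        # Rename/substitute to the app's spelling, if there is one.
        name = GENRE_ALIASES.get(name, name)
        # Keep only genres the app knows, without duplicates.
        if name in KNOWN_GENRES and name not in result:
            result.append(name)
    return ", ".join(result)


example = filter_genres_sketch("Action, Science Fiction, War")
```

With the hypothetical tables above, the example string comes back with the renamed genres in the original order.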

Other Changes

With the database migrated (and we still have the movies with original data), I proceeded to change the code to use the new tables.

For the movie listing, the “show details” option now shows some information from the Movie table and some from the ImdbInfo table. I created a custom template tag that returns the URI for the cover. It uses the first of the following that applies: the cover file, if available; the cover URL, if available; a static image indicating there is no cover, if the cover is not valid; or a static image indicating there is no IMDB info for the movie. A red line is displayed around the cover if it comes from the URL and not a local file.
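The fallback order can be sketched as a plain function. This is only an illustration, not the template tag from the repo: the two static image paths are made up, and the IMDB info is a dict here instead of a model instance. The "no IMDB info" case has to be checked first, since there is nothing to look at otherwise.

```python
NO_COVER_IMAGE = "/static/no-cover.png"  # hypothetical path
NO_IMDB_IMAGE = "/static/no-imdb.png"    # hypothetical path


def cover_uri(imdb_info):
    """Return (uri, is_remote) for a movie's cover.

    is_remote is True only when the cover comes from the URL rather than
    a local file, which is the case that gets the red border.
    """
    if imdb_info is None:
        return NO_IMDB_IMAGE, False
    if imdb_info.get("cover_file"):
        return imdb_info["cover_file"], False
    if imdb_info.get("cover_url"):
        return imdb_info["cover_url"], True
    return NO_COVER_IMAGE, False


local = cover_uri({"cover_file": "/media/covers/tt1.jpg",
                   "cover_url": "https://example.com/a.jpg"})
remote = cover_uri({"cover_file": "", "cover_url": "https://example.com/a.jpg"})
```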

For create/edit of movies, we would now have two forms. One for the movie, and one for the IMDB info. Several of the “shared” fields of the IMDB info, would be hidden fields, so that they are passed along.

On the “GET” portion of create/edit, if there was an IMDB ID selected (via find, for create, or from the movie, for edit), we would query the IMDB and populate the ImdbInfo form with the data. For the genres, if there is IMDB info, we build a list of those entries, followed by all the rest of the possible genres, separated by a dashed line. If there is no IMDB info, the full list of possible genres is displayed. If this was an edit of a movie, the previously selected value would be the default genre.

On the “POST” portion of create/edit, there are four phases. First, we get the movie form and the movie identifier (or “0” if new). Second, we check to see if the user has requested to “clear” the IMDB info; otherwise we try to get the IMDB identifier (or “unknown” if there is none). Third, we get the ImdbInfo form data (could be an existing entry, new entry, or none).
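The ordering of the genre pull-down can be sketched like this. It is a simplified illustration, not the form code from the repo; the genre list and the separator string are placeholders.

```python
ALL_GENRES = ["ACTION", "COMEDY", "DRAMA", "MILITARY", "SCI-FI", "SPORTS"]  # hypothetical
SEPARATOR = "----------"  # stands in for the dashed line


def genre_choices(imdb_genres=""):
    """Build the ordered list of genre options for the pull-down.

    imdb_genres: comma separated genres recommended by IMDB (may be empty).
    """
    suggested = [g.strip() for g in imdb_genres.split(",") if g.strip()]
    if not suggested:
        # No IMDB info: show the full list.
        return list(ALL_GENRES)
    rest = [g for g in ALL_GENRES if g not in suggested]
    return suggested + [SEPARATOR] + rest


with_imdb = genre_choices("SCI-FI, ACTION")
without_imdb = genre_choices()
```

The recommended genres come first, so the most likely choice is at the top of the list.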

Lastly, we validate and save. There are several cases that can occur, and different actions required:

| Case | Action |
|---|---|
| There is no IMDB identifier specified. | If there was an existing movie, with IMDB info, note the entry |
| New IMDB info and info is valid | Save new ImdbInfo entry. |
| New IMDB info and info is invalid | Show form again, along with field errors |
| Existing IMDB info, but no cover file | Save the cover file locally |
| Existing IMDB info with cover file | No additional actions |
| Movie info is not valid | Show form again, along with field errors |

The last step will be to save the movie, associating it with the corresponding IMDB info (or None), and then, if there was a previous IMDB info, check to see if it is referenced any more, and if not, delete the entry and remove the cover file.
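The clean-up check in that last step can be sketched without Django as follows. This is an illustration under stated assumptions, not the view code from the repo: movies and IMDB records are plain dicts, and the cover is a throwaway file in a temp directory. In the real app the "still referenced" test would presumably be a query such as Movie.objects.filter(imdb_info=old_info).exists().

```python
import tempfile
from pathlib import Path


def remove_if_unused(old_info, movies, infos):
    """Delete a shared IMDB record and its cover file once no movie uses it.

    Returns True if the record was removed.
    """
    if old_info is None:
        return False
    if any(movie["imdb_info"] is old_info for movie in movies):
        return False  # still referenced by at least one movie
    cover = old_info.get("cover_file")
    if cover:
        Path(cover).unlink(missing_ok=True)
    infos.pop(old_info["identifier"], None)
    return True


# Usage: two disks share one record; it is removed with the last link.
with tempfile.TemporaryDirectory() as tmp:
    cover_path = Path(tmp) / "tt0000001.jpg"
    cover_path.write_bytes(b"fake image data")
    info = {"identifier": "tt0000001", "cover_file": str(cover_path)}
    infos = {"tt0000001": info}
    movies = [{"title": "Disk 1", "imdb_info": info},
              {"title": "Disk 2", "imdb_info": info}]

    movies[0]["imdb_info"] = None
    first = remove_if_unused(info, movies, infos)   # Disk 2 still uses it
    movies[1]["imdb_info"] = None
    second = remove_if_unused(info, movies, infos)  # now unused
    cover_exists_after = cover_path.exists()
```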

In the GET processing and the form template, any differences in the shared IMDB settings that a movie may have overridden, are noted and displayed in the form, so that one can see when a movie has modified what was in the IMDB info. The field will have a red box border, to show that it was overridden. This applies to the release date, rating, and duration. The title is not highlighted.

There was an issue with displaying movies by genre/date/title, and using case insensitive filtering, so they were corrected as well.

One thing to keep in mind is that, when switching between running in development mode and running in production mode, we are sharing the same database, so it is up to date. However, when creating cover files, they are stored in the PVC for production and on my development machine for development and can be out of sync.

I did the migration on my development machine, and did most testing there, and then copied the cover files to the running pod. For example, with a current app pod viewmaster-579bd8d869-ph4nq, I would do:

kubectl cp public/media/covers/* \
    viewmaster/viewmaster-579bd8d869-ph4nq:/vol/web/media/covers/

The “viewmaster/” prefix on the pod name is the namespace.

Deployment Changes

During this process, I was having problems serving up the cover files, which were stored in the MEDIA_ROOT (an area in the project tree for development, and in /vol/web/ for the production pod).

I had done some AI queries, and found out a few things. First, I had MEDIA_URL set to “media/” and it should have been “/media/” so that it is an absolute path. There was the same issue with the current STATIC_URL.

Second, when running in production (debug=False), Django's static() helper for URL patterns, which can map URIs to the media area, does nothing, because it only works in debug mode. It also sounded like Django and gunicorn (used for the production pod) are not very good at serving up static files and that one should use NGINX (or equivalent), which is better suited.

I used the AI prompting to guide me through how to modify my deployment so that the pod would now have both my Django container AND an NGINX container. Requests coming in, for content, would get dispatched to gunicorn and the Django app, and requests for static/media files would be handled directly by NGINX.

This required modifying the deployment YAML to include NGINX and reference the same PV for config and static files, changing the service to use port 80 and redirect to port 8080 for gunicorn, and providing an NGINX config YAML. I ended up breaking up django.yaml into parts, so to update my deployment, I would now run:

kubectl apply -f nginx-config.yaml
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml

This is assuming that the “viewmaster” namespace was already created and I used it to modify the deployment. Obviously, I had rebuilt the app, with version changed to 0.3.0 (and updated the hard-coded version in movie-list.html), and pushed up the change.

A side benefit was that the app is accessible in production by the LoadBalancer IP, without using a port.

There was a pvc.yaml created as well, since the PVC creation was removed from the deployment.yaml. I already have the PVC, so it didn’t need to be used.

Of note, the deployment.yaml uses the workingDir setting to set the working directory, and then invokes gunicorn with the desired arguments. The Dockerfile still does that same operation, for the case when we are in development mode and running locally (using Django’s server).

In urls.py, the following was added to the end of urlpatterns…

] + static(settings.MEDIA_URL, document_root=settings.MEDIA_ROOT)

This allows the media area to be available, when running in development mode with debug=True.

I also tweaked the helper scripts as I needed to specify to use the “app” container, and not the “nginx” container.

Testing

I’ve been able to verify this working both in development and in production, making sure that cover files are generated on create (or edit, if missing), and deleted when no longer used. In both the list and create/edit forms, I made sure that a red border was drawn around covers that were using the URL and not the file.

Follow-Up Changes

I did a few more changes and tagged v0.3.1. For the create/edit form, if there is a cover file, it is used, instead of always using a URL there. Like the movie list, when a cover URL is used, a red border is drawn around the cover. The create/edit also will display the “no IMDB” and “no image” covers for movies that do not have IMDB info, and movies that do not have a valid cover URL, respectively.

For clean-up, I removed any references to the fields in the Movie model that are now only used in the ImdbInfo model. Then, another migration (0011) was added, that would remove the plot, actors, directors, cover_ref, and movie_id fields from the Movie model. A reverse migration was included to restore the contents of these fields, when going back to version 0010. I tested migrating to 0011 and verified that the app worked, and then reverse migrated to version 0010 and made sure the fields were repopulated in the Movie table.

Are We Done Yet?

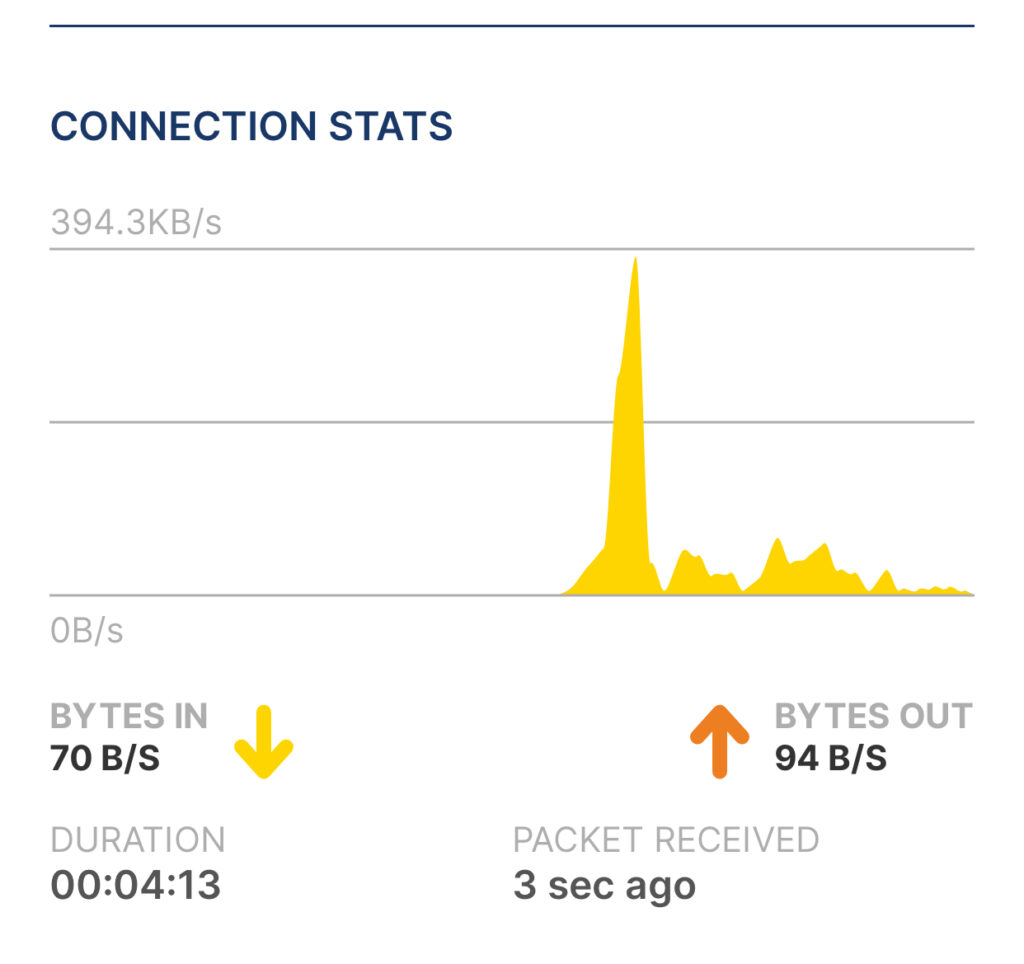

Nope! Ever since I did this split of tables and started using cover files, the movie listing page was loading VERY slowly, taking 4-6 seconds. I tried some performance mods, like lazy loading of images and setting up NGINX to cache static and media files; it was better, but still horrible. I temporarily altered the code that checked for a cover file to just unconditionally use the cover URL, and there was no change.

Using the Chrome Lighthouse performance measuring, I finally realized that it is not the cover images being served locally (or the use of CDN for bootstrap CSS and JS), but the actual page processing. I saw these stats:

| Metric | Time |
|---|---|
| Duration | 4.4 s |
| Queuing and connecting | 1.42 ms |
| Request sent and waiting | 4.36 s |
| Content downloading | 31.36 ms |
| Waiting on main thread | 48.17 ms |

AI suggested looking at SQL queries, and view and template profiling, to see why the server took so long to respond, and recommended installing Django Debug Toolbar to do some profiling and see what was up. I did that, and found that a HUGE amount of time was spent doing SQL queries, and some were done repeatedly during the movie listing.

I looked at the queries done, along with their reference to the code location. After doing queries for the count of movies, cost per format, and count of paid movies, it did a query for all movies, ordered by title, as was selected. But then I was seeing queries for ImdbInfo entries, by ID, repeated once per movie (659 times). It looked like this was in the template code for the movie listing.

I asked AI about what I suspected, and sure enough, in the view it does a query of movies for the form, for example:

movies = Movie.objects
if mode == "alpha":
    movies = movies.order_by(Lower("title"))
elif mode == "cat_alpha":
    # ... (remaining modes omitted)

And then in the template, I use:

<div class="col-lg">{{ movie.rating }}</div>

<div class="col-lg-5">STARS: {% actors movie.imdb_info %}</div>

Where the actors custom template tag will just reference the field, or provide a default value. These references to the imdb_info field cause additional (N+1) queries: one for the list of movies, plus one more for each movie's ImdbInfo entry.

The solution is to use select_related() in the query, so I now have:

movies = Movie.objects.select_related('imdb_info')

Now, instead of having 665 queries in 6909.86 ms, we have 6 queries in 177.14 ms! Working well now.

To summarize, the changes made (tagged at v0.3.2) are:
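To see why the query count drops, here is a small stand-alone demonstration using Python's built-in sqlite3 module instead of Django. select_related() makes the ORM fetch the related rows with a SQL JOIN in the same query; the sketch below imitates both access patterns by hand. The table layout and the 50 rows of data are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE imdb_info (id INTEGER PRIMARY KEY, actors TEXT);
    CREATE TABLE movie (id INTEGER PRIMARY KEY, title TEXT,
                        imdb_info_id INTEGER REFERENCES imdb_info(id));
""")
for i in range(1, 51):
    conn.execute("INSERT INTO imdb_info VALUES (?, ?)", (i, f"Cast {i}"))
    conn.execute("INSERT INTO movie VALUES (?, ?, ?)", (i, f"Movie {i}", i))

counter = {"queries": 0}


def run(sql, params=()):
    """Execute a SELECT, count it, and return all rows."""
    counter["queries"] += 1
    return conn.execute(sql, params).fetchall()


# N+1 pattern: one query for the movies, then one more per movie.
counter["queries"] = 0
for _id, _title, info_id in run("SELECT id, title, imdb_info_id FROM movie"):
    run("SELECT actors FROM imdb_info WHERE id = ?", (info_id,))
n_plus_one = counter["queries"]

# JOIN pattern: movies and their IMDB info in a single query.
counter["queries"] = 0
rows = run("""SELECT movie.title, imdb_info.actors
              FROM movie
              LEFT JOIN imdb_info ON movie.imdb_info_id = imdb_info.id""")
joined = counter["queries"]
```

The first pattern issues one query per movie on top of the initial one, while the JOIN returns the same data in a single round trip.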

- Fixed database query, when using new ImdbInfo table

- Added NGINX config to improve performance of static and media file access

- Added Django Debug Toolbar for development analysis.

- Providing bootstrap CSS & JS 5.3.0 locally for performance.

- Corrected JavaScript handling of select pull-down to work in Chrome.

Whew!

Future?

Here are the things I may want to work on…

- Turn on HTTPS, certs, and add OTP to be able to access remotely? (Could set up HTTP/2 as well)

- Consider pagination for the movie listing, for better responsiveness, though it is not bad currently.

- Use some of these performance improvement techniques, Django Debug Toolbar, and GitLeaks (tool to ensure confidential info is not stored in Git) for other web apps that I have made.